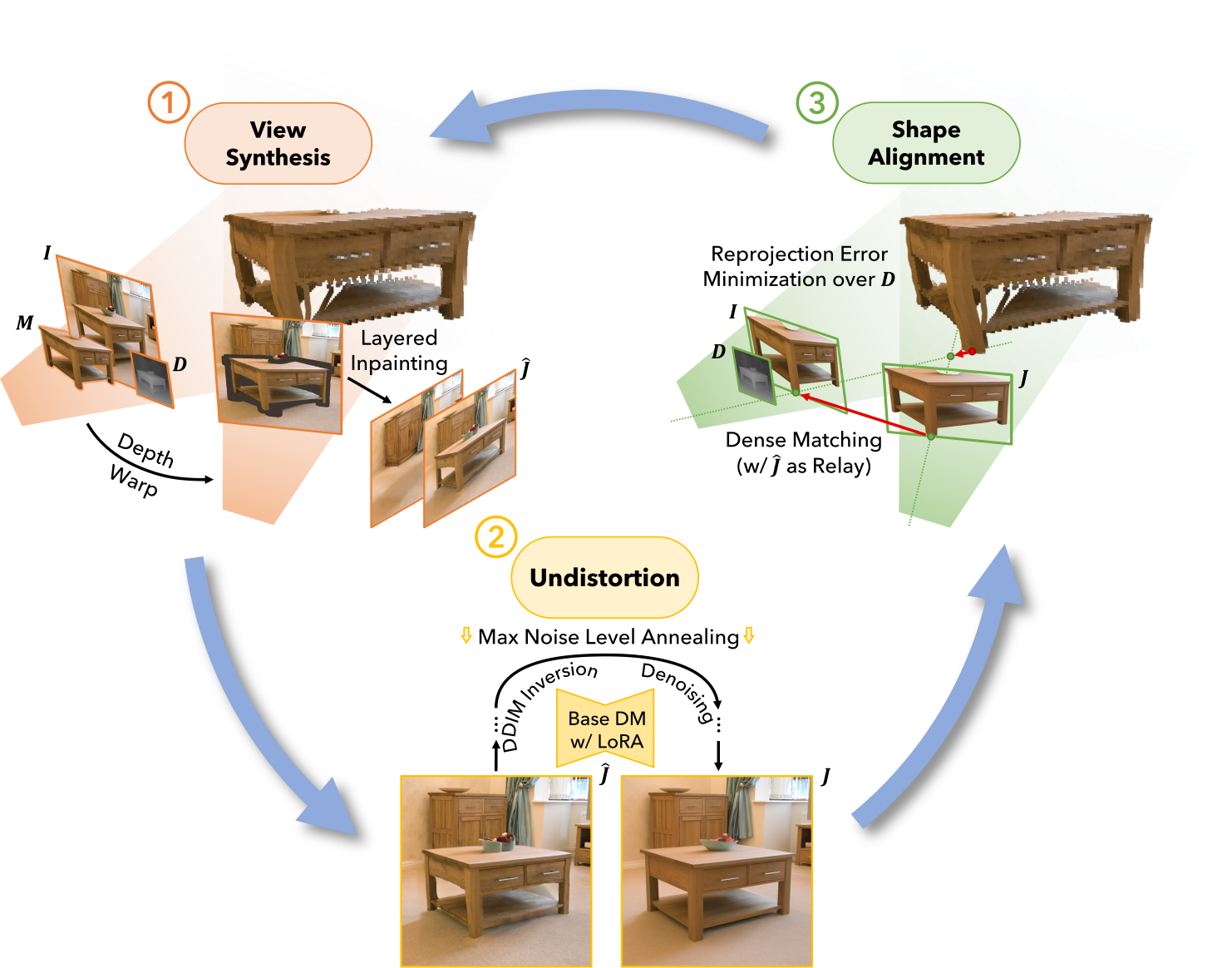

Pipeline Visualization

The overall pipeline. Our 3D-aware editing method iterates over three phases. The view synthesis phase generates a novel view of the selected object using depth-based warping and layered diffusion inpainting, with the initial depth map obtained by monocular depth estimation. The undistortion phase rectifies distortions in the target-view image caused by inaccurate depth estimates. The shape alignment phase aligns the object shape in the original input image with the undistorted target image by optimizing the depth map to minimize a dense image correspondence error. After several iterations, this process yields plausible and consistent 3D editing results.
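
To make the depth-based warping step concrete, the following is a minimal sketch of how source pixels can be reprojected into a target view from a depth map. It is not the authors' implementation: the pinhole camera model, the shared intrinsics `K`, the relative pose `(R, t)`, and the function name `warp_pixels_by_depth` are all assumptions introduced for illustration.

```python
import numpy as np


def warp_pixels_by_depth(depth, K, R, t):
    """Reproject every source pixel into a target view (illustrative sketch).

    depth: (H, W) per-pixel depth in the source camera frame, assumed > 0.
    K:     (3, 3) pinhole intrinsics, assumed shared by both views.
    R, t:  rotation (3, 3) and translation (3,) mapping source-camera
           coordinates to target-camera coordinates (hypothetical inputs).
    Returns (H, W, 2) target pixel coordinates (x, y) and (H, W) target depth.
    """
    H, W = depth.shape
    ys, xs = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Homogeneous pixel coordinates (x, y, 1) for every source pixel.
    pix = np.stack([xs, ys, np.ones_like(xs)], axis=-1).astype(np.float64)

    # Back-project pixels to 3D points in the source camera frame.
    rays = pix @ np.linalg.inv(K).T
    pts_src = rays * depth[..., None]

    # Move the points into the target camera frame and project them.
    pts_tgt = pts_src @ R.T + t
    proj = pts_tgt @ K.T
    z = proj[..., 2]
    uv = proj[..., :2] / z[..., None]
    return uv, z
```

With an identity pose the returned coordinates equal the source pixel grid. A pure sideways translation shifts each pixel horizontally by an amount inversely proportional to its depth, so errors in the estimated depth translate directly into misplaced pixels in the target view. The sketch only computes where pixels land; regions of the target view that receive no source pixel would still need to be filled, which is the role the caption assigns to inpainting.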